Background

I wrangle data from several environments in an inventory project. One of the newest environments is a SQL database that contains a ticketing system’s data. Rather than use a foreach() method that “upserts” and deletes SQL items Row-By-Arduous-Row, I sought a new strategy that utilized SQL’s powerful JOIN operators. This approach has played out extremely well on the enterprise level, and I want to share what I’ve learned about the PowerShell side of the operation. Comments and questions are encouraged—iron sharpens iron.

Insights

The goal in the code is to map a DataTable object to a single SQLCommand parameter. It is achieved in the following line.

$manageTargetTable.Parameters.Add([SqlParameter]::new("@dt",[SqlDbType].Structured)).Value = $SalesOrderDetails;

The @dt SQL parameter is typed as a SQL User-Defined Table Type (UDTT), which is declared as a Table-Valued Parameter (TVP) in a SQL stored procedure (a sproc). A DataTable is allowable for mapping to the TVP, which is typed by the [SqlDbType].Structured declaration. I have yet to find a suitable alternative object that can be mapped to the TVP—even a class that extends Data.Datatable. Therefore, I use one class as a blueprint for the table row, and a PowerShell function to store each instance in the DataTable. This strategy has the additional benefit of skipping the need for a SQL temporary (tempdb object) or a persistent SQL staging table.

Some Prerequisites

- Exploration of the source data.

- A SQL table that stores the source data in their final form.

- SQL User-Defined Table Type.

- The UDTT has a primary key to speed queries.

- A SQL Stored Procedure that uses a Table-Value Parameter.

- The sproc executes an

UPDATE,INSERT, andDELETEstatements with joins between the source table (the UDTT) and a target SQL table.

- The sproc executes an

Demo Code

I chose a CSV file as the data source because of the familiarity with working in the format on this forum. It contains 121,317 rows from the AdventureWorks 2014 SQL database. More information is in the Remarks section.

using namespace System.Collections.Generic;

using namespace System.Data;

using namespace System.Data.SqlClient;

using namespace System.IO;

using namespace Microsoft.SqlServer.Management.Smo;

[void][reflection.assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo");

Class SalesOrderDetail

{

[string] $CarrierTrackingNumber;

[float] $LineTotal;

[int] $OrderQty;

[int] $ProductID;

[int] $SalesOrderDetailID;

[int] $SalesOrderID;

[int] $SpecialOfferID;

[decimal] $UnitPrice;

[double] $UnitPriceDiscount;

[string] $HashID;

SalesOrderDetail(){} # an empty default ctor.

SalesOrderDetail([string]$trackNbr,[float]$lineTot,[int]$quantity,[int]$prodId,[int]$detailId,[int]$orderId,[int]$offerId,[decimal]$price,[decimal]$discountPrice)

{

$this.CarrierTrackingNumber = $trackNbr;

$this.LineTotal = $lineTot;

$this.OrderQty = $quantity;

$this.ProductID = $prodId;

$this.SalesOrderDetailID = $detailId;

$this.SalesOrderID = $orderId;

$this.SpecialOfferID = $offerId;

$this.UnitPrice = $price;

$this.UnitPriceDiscount = $discountPrice;

$this.HashID = $this.GenerateHash(($trackNbr,$lineTot,$quantity,$price,$discountPrice)-join'');

}

[string] GenerateHash([string] $p1)

{

$paramStream = [MemoryStream]::new();

$streamWriter = [StreamWriter]::new($paramStream);

$streamWriter.write($p1);

$streamWriter.Flush();

$paramStream.Position = 0;

return Get-FileHash -InputStream $paramStream -Algorithm MD5 | Select-Object -ExpandProperty Hash;

}

}

$SalesOrderDetails = [DataTable]::new("SalesOrderDetails","Inventory")

[void]$SalesOrderDetails.Columns.Add("CarrierTrackingNumber",[string]);

[void]$SalesOrderDetails.Columns.Add("LineTotal",[float]);

[void]$SalesOrderDetails.Columns.Add("OrderQty",[int]);

[void]$SalesOrderDetails.Columns.Add("ProductID",[int]);

[void]$SalesOrderDetails.Columns.Add("SalesOrderDetailID",[int]);

[void]$SalesOrderDetails.Columns.Add("SalesOrderID",[int]);

[void]$SalesOrderDetails.Columns.Add("SpecialOfferID",[int]);

[void]$SalesOrderDetails.Columns.Add("UnitPrice",[decimal]);

[void]$SalesOrderDetails.Columns.Add("UnitPriceDiscount",[decimal]);

[void]$SalesOrderDetails.Columns.Add("HashID",[string]);

$SalesOrderDetails.PrimaryKey = $SalesOrderDetails.Columns["SalesOrderID","SalesOrderDetailID"];

function Add-SalesOrderDetail([SalesOrderDetail] $item)

{

$row = $SalesOrderDetails.NewRow()

foreach($i in $item)

{

$row.CarrierTrackingNumber = $item.CarrierTrackingNumber;

$row.LineTotal = $item.LineTotal;

$row.OrderQty = $item.OrderQty;

$row.ProductID = $item.ProductID;

$row.SalesOrderID = $item.SalesOrderID;

$row.SalesOrderDetailID = $item.SalesOrderDetailID;

$row.SpecialOfferID = $item.SpecialOfferID;

$row.UnitPrice = $item.UnitPrice;

$row.UnitPriceDiscount = $item.UnitPriceDiscount;

$row.HashID = $item.HashID;

}

$SalesOrderDetails.Rows.Add($row)

}

$source = (Import-Csv A:\SalesOrderDetail.csv -Header 'SalesOrderID','SalesOrderDetailID','CarrierTrackingNumber','OrderQty','ProductID','SpecialOfferID','UnitPrice','UnitPriceDiscount','LineTotal');

foreach($i in $source)

{

$order = [SalesOrderDetail]::new($i.CarrierTrackingNumber,$i.LineTotal,$i.OrderQty,$i.ProductID,$i.SalesOrderDetailID,$i.SalesOrderID,$i.SpecialOfferID,$i.UnitPrice,$i.UnitPriceDiscount)

Add-SalesOrderDetail($order);

}

[Server]$targetServer = "MySQLServerName";

[Database]$targetDatabase = $targetServer.Databases.Item("MySQLDatabase");

$targetConnection = [SQLConnection]::new("Server=$($targetServer.Name); Database=$($targetDatabase.Name); Integrated Security=true");

$targetConnection.Open()

$manageTargetTable = [SqlCommand]::new("Inventory.ManageSalesOrderDetails",$targetConnection);

$manageTargetTable.CommandType = [CommandType]::StoredProcedure;

$manageTargetTable.Parameters.Add([SqlParameter]::new("@dt",[SqlDbType].Structured)).Value = $SalesOrderDetails;

[void]$manageTargetTable.ExecuteNonQuery(); #void b/c my sql sprocs return a 0 when successful.

$targetConnection.Close()

return

Results

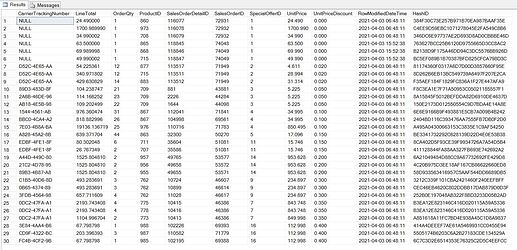

The result set is the first 3 rows for each UnitPriceDiscount by RowModifiedDate. The data are unmodified from the AdventureWorks data except that I use decimal(9,3) on the two Unit~ elements. I withheld some rows from the initial insert to provide different RowModifiedDate values—some of which are returned—later.

Remarks

- Data exploration is “homework” that allows for better modeling and architectures downstream.

- I put most elements in alphabetical order to keep track of them as I move system-to-system and language-to-language.

- The primary key on the

DataTableis used for source data validation. HashIDis used in the sproc to determine if one or more changes have occurred in a subset of the tuple members.- (SQL results)

RowModifiedDateisSYSUTCDATETIME()by default. - (SQL results) I do not use the

moneySQL datatype; I format the decimal values to currency (and also percentages) in SQL views after calculations or aggregations.